In university I’d spend lazy afternoons picking through videogame-related internet forums. It made me feel connected to a community of fellow enthusiasts: helped me digest the latest industry gossip, and develop with grave diligence all my best Videogame Opinions.

People there would often bemoan the presence of ‘bugs’ in the games they purchased. ‘How can the developers get away with this garbage?’ someone would exclaim in mock bewilderment. ‘Do they not bug test their games at all? It’s inexcusable! It’s as if they spent all their time on the graphics, which are shitty anyway,’ and so on. It’s become an undercurrent in videogameland: a wellspring of populist outrage, fit to spice up any review, Let’s Play or livestream.

Back then I would have typed up a furious, factual defence of the game developer I hoped one day to become: first filling up the little ‘quick reply’ box, then copying-and-pasting my growing manuscript into a full-blown Word document. Today, however, I’ve learned these posters’ questions were a sort of rhetorical Trojan Horse. In debating whether some otherwise-perfect game experience has been marred by a shifty behind-the-scenes computer programmer, I’d already accepted two bad assumptions: first that the ‘perfect game experience’ can objectively exist, second that I might purchase that experience in a store. And though the conversation always cloaked itself in fact—this particular game developer, that particular variety of computer glitch—it was never really about facts. Instead it was about feelings, and about status. It was about persuading people that they’d lost something (that, in fact, someone had stolen it from them) when in truth they’d never had it to begin with. It was about all the things advertisers manipulate when they transmute ‘wants’ into ‘needs’.

To understand why videogames contain so many bugs—and why people find this so upsetting—it helps to think about the gradual extermination of all life on the planet Earth.

Geologists use the word ‘Holocene’ (which in Greek means something like ‘entirely recent’) to describe our planet’s present geological epoch. It spans a bit less than twelve thousand years: the entirety of written history, plus a while before that. They used to think of this as an ‘interglacial’ period: a warm moment, all too brief, before slow shifts in the planet’s orbit and axial tilt send ice sheets creeping once more across the globe. Yet in the ’70s and ’80s it became apparent that something had changed. Gravity was no longer the dominant cosmic force exerting its will upon our climate. The stronger force was now humanity: not our force of intellect, you understand, but rather the raw fact of our crawling across the earth. Where once there had been little except icy grey crags, now there were billions of hydrocarbon-emitting fires. And with fire, as we’re fond of saying in videogameland, came disparity.

Numerous geologists (completely independent of one another, as if this all weren’t upsetting enough) arrived at the same prospective label for the exciting new circumstances into which we’d plunged ourselves: the Anthropocene. Crucially, this term does not describe an epoch ‘controlled’ by humans; we are not masters of it, in the sense that we sit upon some throne and decide how things will be. Our presence is more like the impact from an asteroid, or a tremendous volcanic eruption: the sort of thing our legal system describes as an ‘act of God’. (On this point the scientific community seems at last to agree with the Catholic Church.)

It’s tempting, I think, to extrapolate the fact of our geological impact towards some dark supposition about human agency. I could claim we are biologically incapable of modifying our behaviour, beyond perhaps the scope of a small community; that we have sped for decades towards oblivion because some ‘bug’ in our brain chemistry prevents us from doing otherwise. Would this not, on some level, be a comforting belief? That we cannot stop consuming, no matter how we might try? That we shall consume until the point of absolute exhaustion, felled at last by our tragic, bare humanity?

But then I remember why I stopped posting on videogame forums. There are lots of ways to influence human behaviour; it’s just that sciency-sounding facts have never had much to do with it.

Grandiose, unchecked consumerism is part of a story we’ve told ourselves about ourselves: an undercurrent, flowing fast beneath the questions people ask us every day. Don’t you ‘want it all’, dear consumer? But don’t you also want it now? Why wouldn’t you want both? Why shouldn’t you want them simultaneously? Haven’t you heard that the customer is always right?

In the entirety of the Holocene, but more so lately, few ideas have proven more destructive than this. It reduces us to the role of an infinitely naive baby-person whose job is to demand as many things as possible as loudly as they can manage. It does not require our demands to make sense; it does not require them to correspond with the real world in any way (nor with any of the people who live upon it). Our role is merely to desire things, intensely and for reasons we barely understand. The market’s role, in turn, is to disappoint us.

For those of us who work in game development, the crushing truth underlying every keystroke is that the consumer will not get everything they want. They simply can’t; their desires, fuelled as they are by imagination and self-image and alarming quantities of Dr Pepper, run tangled and counter to one another. Many malign the Assassin’s Creed franchise for the way its graphical bugs obscure our view of the games’ cutting-edge rendering techniques; yet of course, it’s impossible to occupy the ‘cutting edge’ of rendering technology without rushing your software out the door ahead of all your competitors. By the same token, it’s tragic when a saved-game bug destroys 73 hours of progress into one of Bethesda’s sprawling fantasy adventures; yet it’s impossible to build 73 hours’ worth of sprawling fantasy without writing software so massive that no single person could understand how it all works.

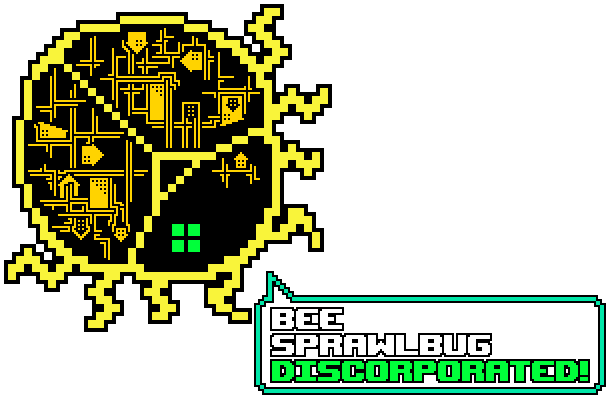

In light of all this, the word ‘bug’ becomes little more than a vapid consumer fantasy. Consider the story it tells us: we envision a swarm of small problems afflicting the surface of some otherwise-stable structure, like silverfish in an apartment complex. This does not strike me as an accurate way to picture videogame software. Try this instead. A contemporary videogame codebase looks more or less like Blighttown from the considerably buggy Dark Souls series: a tower of rickety software bits built atop ricketier software bits, swaying back and forth in pitch darkness. If you travel in the wrong direction, you’ll fall to your death; if you pull on the wrong structural support, you’ll send the whole thing crashing down into a poison swamp. ‘Bug fixing’, as they say, is not so much about fumigating tiny pests as it is about duelling rat people to the death using a heavy, blunt instrument. You do as much of it as you can bear, praying you don’t accidentally make things worse; and all the while, you’re losing time you could be spending on the ‘cutting-edge graphics’ your customers simultaneously demand from you.

So here are two competing stories concerning the morality of our desires. In the first story desire is a hero, double-crossed by the schemes of everyone around it: craftspeople, merchants, lovers and so on. In the second story, desire is the antagonist that pits these people against a ticking clock. The former story leads us merrily towards Armageddon. The latter, however, unites us in our struggle against common foes. That’s what I like about the feeling of making games: racing against time, pushing the work as far as I can, cooperating with both friends and rivals. And it’s why I often prefer playing ‘buggy’ games. I recognise in them the sort of humanity I’m convinced I possess: that which compromises and struggles and grasps, rather than ceaselessly consumes. This is the story I choose to tell myself about myself. I think it’s the only one I can live with.